At Coherence, we're on a mission to make AI conversations not just intelligent, but visually rich. When we set out to build our chat SDK, we quickly realized that text-only responses were leaving value on the table. Modern AI agents analyze data, generate insights, and identify patterns - but forcing them to describe charts and graphs in words is like asking someone to explain a sunset over the phone.

The challenge? How do you seamlessly integrate interactive data visualizations into a streaming chat interface without compromising performance, breaking the conversation flow, or requiring developers to write complex visualization code?

Early in development, we faced a fundamental architectural choice that would define our entire approach. Should charts be generated server-side in a sandboxed environment, or should we push the rendering responsibility to the client?

We initially explored server-side chart generation using containerized rendering environments. The appeal was obvious: complete control over the rendering pipeline, consistent output across all clients, and the ability to leverage powerful server resources for complex visualizations. We prototyped solutions using headless browsers in Docker containers, generating static images and SVGs that could be embedded directly into chat responses.

However, this approach revealed several critical limitations:

1. **Latency Bottlenecks**: Every chart required a full server round-trip, adding 200-500ms to response times - enough to break the conversational flow we were trying to preserve.

2. **Limited Interactivity**: Static images meant no zooming, hover effects, or dynamic filtering - features users increasingly expect from modern data visualizations.

3. **Scalability Concerns**: Every chart generation consumed significant CPU and memory on our servers, so rendering capacity had to grow with chat volume, creating potential bottlenecks during peak usage.

4. **Deployment Complexity**: Managing sandboxed rendering environments across different deployment targets added operational overhead that would burden our users.

Instead, we opted for a hybrid approach that leverages client-side rendering with intelligent server-side preprocessing. Here's why this architecture won out:

1. **Real-Time Responsiveness**: Charts render as data streams in, creating a fluid experience where visualizations build progressively alongside the AI's response.

2. **Rich Interactivity**: Full access to modern web APIs enables features like real-time filtering, smooth animations, and responsive design that adapts to different screen sizes.

3. **Horizontal Scalability**: Rendering load distributes across all connected clients rather than concentrating on our servers, allowing us to scale to thousands of concurrent conversations without infrastructure bottlenecks.

4. **Developer Flexibility**: Our SDK can adapt to different frontend frameworks and styling systems, rather than forcing developers to work with pre-generated static assets.

The key insight was that we could maintain the benefits of server-side processing - data validation, security, and consistent formatting - while pushing the computationally intensive rendering work to clients that are already optimized for interactive graphics.

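This post details our technical approach to automatic chart detection, extraction, and rendering in real-time chat streams. We'll cover how we built a system that can detect chart definitions mid-stream, keep the surrounding text flowing without waiting on charts, and render interactive visualizations inline without breaking the conversation.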

If you're building conversational AI, working with streaming data visualization, or curious about multimodal chat interfaces, this deep dive will show you exactly how we solved these challenges - and the tradeoffs we made along the way.

Picture this scenario: A user asks their AI assistant, "How did our sales perform each quarter compared to our projections?"

Without visualization, the response might be:

> "Sales in Q1 were $1.2M against a projection of $1.5M, Q2 saw $1.8M against $1.6M projected, Q3 achieved $2.1M versus $1.9M projected..."

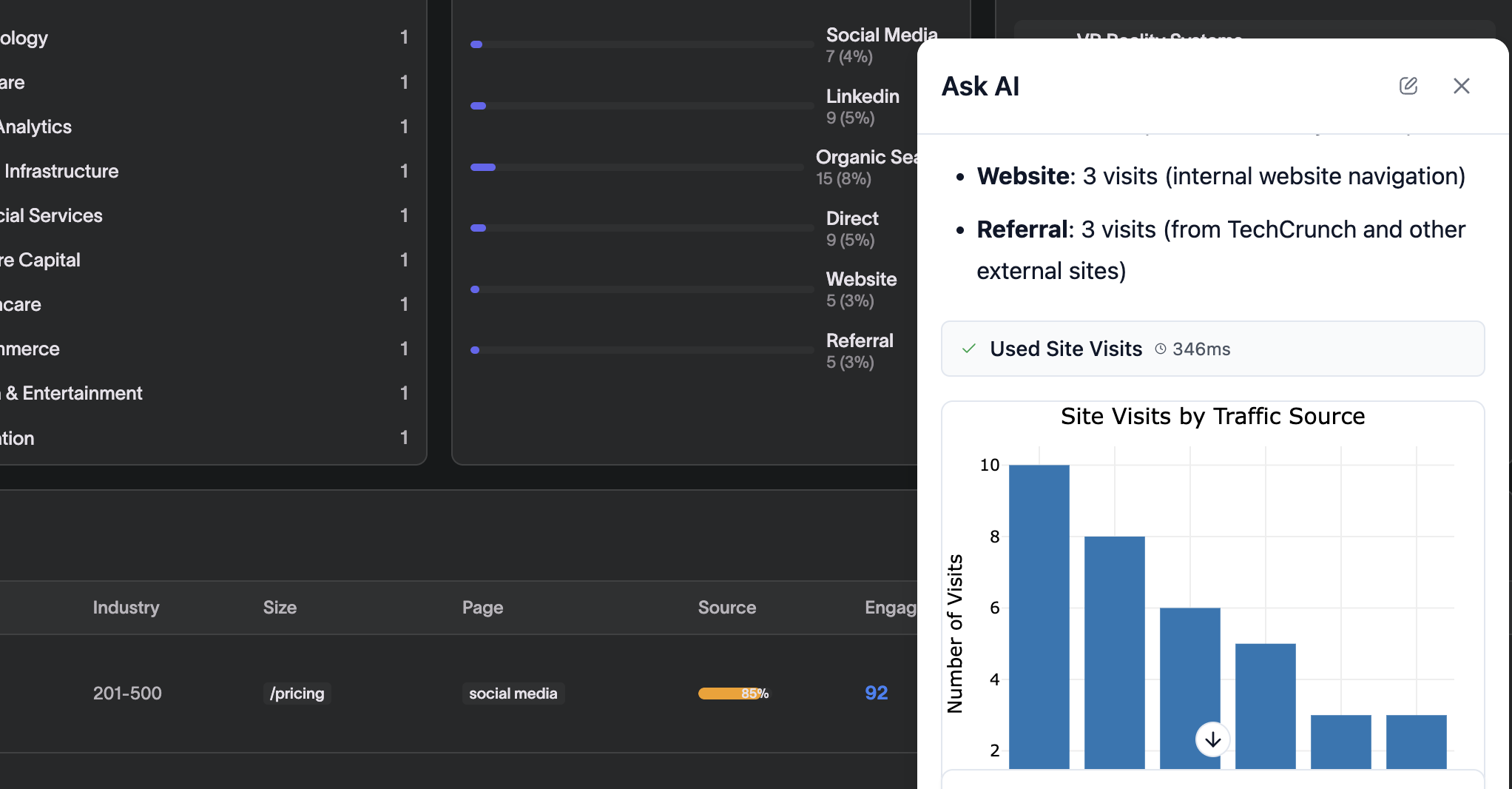

With our chart integration, the same data transforms into an interactive (HTML) bar chart that appears inline with the explanation, making trends instantly visible and insights actionable.

Our breakthrough came from treating charts as first-class citizens in the message stream. Here's how it works:

1. **Smart Detection During Streaming**: As the LLM generates responses, our `ChartExtractor` monitors for special delimiters

2. **Zero-Lag Rendering**: Text streams to the user immediately, while charts are detected and processed in parallel

3. **Graceful Degradation**: If chart rendering fails, the conversation continues uninterrupted

```python
# From our ChartExtractor implementation
class ChartExtractor:
    CHART_START_DELIMITER = "<!--CHART_START-->"
    CHART_END_DELIMITER = "<!--CHART_END-->"

    @staticmethod
    def contains_chart_delimiter(content: str) -> bool:
        """Check if content contains the chart start delimiter."""
        return ChartExtractor.CHART_START_DELIMITER in content
```

The trickiest part? Handling real-time streaming. When an LLM is generating a response token by token, you can't wait for the entire message to complete before rendering charts. Our solution uses a clever buffering strategy:

```python
# Simplified from our StreamingCallback
if not self.chart_detected and ChartExtractor.contains_chart_delimiter(accumulated_content):
    # Split content at delimiter
    pre_chart, _ = ChartExtractor.split_at_chart_delimiter(accumulated_content)

    # Send any pre-chart content immediately
    stream_handler.send_chunk(pre_chart)

    # Mark that we're now in chart territory
    self.chart_detected = True
```

This approach ensures users see text immediately while charts load asynchronously - the best of both worlds.
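To make the buffering idea concrete, here is a minimal, self-contained sketch. It is not our production `StreamingCallback`: the `ChartStreamSplitter` class, its `on_text`/`on_chart` callbacks, and the hold-back rule are illustrative assumptions. Prose before a chart is flushed as soon as it arrives, chart payloads are buffered until the end delimiter, and a trailing fragment like `<!--CHART` is held back so a delimiter split across tokens never flashes on screen.

```python
from typing import Callable

START = "<!--CHART_START-->"
END = "<!--CHART_END-->"


def partial_suffix_len(text: str, marker: str) -> int:
    """Length of the longest suffix of `text` that is a proper prefix of `marker`."""
    for k in range(min(len(text), len(marker) - 1), 0, -1):
        if text.endswith(marker[:k]):
            return k
    return 0


class ChartStreamSplitter:
    """Hypothetical splitter: forwards prose at once, buffers chart payloads."""

    def __init__(self, on_text: Callable[[str], None], on_chart: Callable[[str], None]):
        self.on_text = on_text
        self.on_chart = on_chart
        self.buffer = ""
        self.in_chart = False

    def feed(self, token: str) -> None:
        self.buffer += token
        # Consume every complete delimiter currently in the buffer
        while True:
            marker = END if self.in_chart else START
            idx = self.buffer.find(marker)
            if idx == -1:
                break
            before, self.buffer = self.buffer[:idx], self.buffer[idx + len(marker):]
            if self.in_chart:
                self.on_chart(before)  # a complete chart payload
            elif before:
                self.on_text(before)  # prose that preceded the chart
            self.in_chart = not self.in_chart
        if not self.in_chart:
            # Flush prose now, but hold back a tail that could begin a delimiter
            hold = partial_suffix_len(self.buffer, START)
            ready = self.buffer[: len(self.buffer) - hold]
            if ready:
                self.on_text(ready)
            self.buffer = self.buffer[len(self.buffer) - hold:]

    def close(self) -> None:
        """End of stream: emit leftovers as text so an unterminated chart never vanishes."""
        if self.in_chart:
            self.buffer = START + self.buffer
        if self.buffer:
            self.on_text(self.buffer)
        self.buffer, self.in_chart = "", False


texts, charts = [], []
splitter = ChartStreamSplitter(on_text=texts.append, on_chart=charts.append)
for token in ["Sales rose. <!--CHART", '_START-->{"type": ', '"bar"}<!--CHART_END--> Done.']:
    splitter.feed(token)
splitter.close()
print(texts)   # ['Sales rose. ', ' Done.']
print(charts)  # ['{"type": "bar"}']
```

Note that "Sales rose. " reaches the user after the very first token, even though that token ends halfway through the start delimiter.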

Our `ChartExtractor` employs multiple detection strategies to maximize compatibility. The primary format is an explicit delimiter pair wrapping a fenced `chart` block:

````
Here's your sales analysis...

<!--CHART_START-->
```chart
{
  "type": "bar",
  "series": [{
    "name": "Actual Sales",
    "data": [{"x": "Q1", "y": 1200000}, {"x": "Q2", "y": 1800000}]
  }],
  "layout": {"title": "Quarterly Sales Performance"}
}
```
<!--CHART_END-->
````

The extractor also recognizes standard JSON code blocks marked with `json` or `chart` language identifiers.

For maximum flexibility, we support inline chart definitions: `<!-- CHART: {...} -->`
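Here is a sketch of how these three formats could be detected in a completed response, in priority order. The regular expressions, the `extract_charts` name, and the rule that a JSON object only counts as a chart if it has a `type` key are our assumptions for illustration, not the actual `ChartExtractor` code.

```python
import json
import re
from typing import Any, Dict, List

# Strategy 1: explicit delimiters, optionally wrapping a fenced chart/json block
DELIMITED = re.compile(
    r"<!--CHART_START-->\s*(?:```(?:chart|json)\s*)?(.*?)\s*(?:```\s*)?<!--CHART_END-->",
    re.DOTALL,
)
# Strategy 2: plain fenced code blocks tagged `chart` or `json`
FENCED = re.compile(r"```(?:chart|json)\s*(.*?)```", re.DOTALL)
# Strategy 3: inline HTML comments of the form <!-- CHART: {...} -->
INLINE = re.compile(r"<!--\s*CHART:\s*(\{.*?\})\s*-->", re.DOTALL)


def extract_charts(text: str) -> List[Dict[str, Any]]:
    """Return chart dicts found in a complete response, grouped by strategy."""
    charts: List[Dict[str, Any]] = []
    for pattern in (DELIMITED, FENCED, INLINE):
        for match in pattern.finditer(text):
            try:
                candidate = json.loads(match.group(1))
            except json.JSONDecodeError:
                continue  # malformed JSON is skipped; the text fallback covers it
            # Assumption: only JSON objects with a "type" key count as charts
            if isinstance(candidate, dict) and "type" in candidate:
                charts.append(candidate)
        # Strip this strategy's matches so later strategies don't re-detect them
        text = pattern.sub("", text)
    return charts


response = (
    "Here's your sales analysis...\n"
    "<!--CHART_START-->\n```chart\n"
    '{"type": "bar", "series": [{"name": "Actual Sales", "data": [{"x": "Q1", "y": 1200000}]}]}\n'
    "```\n<!--CHART_END-->\n"
    'And a breakdown: <!-- CHART: {"type": "pie", "series": []} -->'
)
print([chart["type"] for chart in extract_charts(response)])  # ['bar', 'pie']
```

Because each strategy strips what it matched before the next one runs, a fenced block inside a delimited chart is not counted twice. The trade-off in this sketch is that results come back grouped by strategy rather than in document order.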

Another optimization we decided to implement is lazy-loading visualization libraries. Your bundle stays lean - we only load Plotly, Chart.js, or other libraries when actually needed.

This approach keeps initial page loads fast while providing rich visualization capabilities on demand.
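In the SDK this happens in the browser bundle. The Python sketch below only illustrates the same load-on-first-use pattern in isolation: the loader functions are hypothetical stand-ins for fetching Plotly or Chart.js, and `functools.lru_cache` ensures each one runs at most once.

```python
from functools import lru_cache
from typing import Any, Callable, Dict, List

load_log: List[str] = []  # records which libraries were actually loaded


# Hypothetical loaders: stand-ins for fetching the Plotly / Chart.js bundles
def load_plotly() -> Dict[str, str]:
    load_log.append("plotly")
    return {"name": "plotly"}


def load_chartjs() -> Dict[str, str]:
    load_log.append("chartjs")
    return {"name": "chartjs"}


LOADERS: Dict[str, Callable[[], Dict[str, str]]] = {
    "plotly": load_plotly,
    "chartjs": load_chartjs,
}


@lru_cache(maxsize=None)
def get_library(name: str) -> Dict[str, str]:
    """Run a library's loader on first request only; later calls hit the cache."""
    return LOADERS[name]()


def render(chart: Dict[str, Any], library: str = "plotly") -> str:
    lib = get_library(library)  # nothing is loaded until a chart needs it
    return f"{lib['name']} rendered {chart['type']}"


print(load_log)  # []
render({"type": "bar"})
render({"type": "line"})
print(load_log)  # ['plotly']
```

A conversation that never produces a chart never pays for any library, and one that produces many charts pays for each library exactly once.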

Let's walk through a concrete example. A SaaS platform integrates Coherence to add conversational analytics:

> "Show me user engagement trends for the past 6 months broken down by plan type"

The assistant's raw streamed response looks like this:

````
I've analyzed your user engagement data for the past 6 months. Here are the key findings:

1. **Premium users** show consistently higher engagement, averaging 82% weekly active users
2. **Standard plan** engagement has grown from 45% to 58% - a positive trend
3. **Free tier** remains stable around 31% weekly active

<!--CHART_START-->
```chart
{
  "type": "line",
  "series": [
    {
      "name": "Premium Plan",
      "data": [
        {"x": "Jan", "y": 79}, {"x": "Feb", "y": 81}, {"x": "Mar", "y": 83},
        {"x": "Apr", "y": 82}, {"x": "May", "y": 84}, {"x": "Jun", "y": 82}
      ]
    },
    {
      "name": "Standard Plan",
      "data": [
        {"x": "Jan", "y": 45}, {"x": "Feb", "y": 48}, {"x": "Mar", "y": 51},
        {"x": "Apr", "y": 54}, {"x": "May", "y": 56}, {"x": "Jun", "y": 58}
      ]
    },
    {
      "name": "Free Tier",
      "data": [
        {"x": "Jan", "y": 32}, {"x": "Feb", "y": 30}, {"x": "Mar", "y": 31},
        {"x": "Apr", "y": 31}, {"x": "May", "y": 30}, {"x": "Jun", "y": 31}
      ]
    }
  ],
  "layout": {
    "title": "Weekly Active Users by Plan Type (%)",
    "xaxis": {"title": "Month"},
    "yaxis": {"title": "Weekly Active %"}
  }
}
```
<!--CHART_END-->

The upward trend in Standard plan engagement suggests your recent feature releases are resonating with this segment.
````

Users see the text explanation immediately as it streams, followed by an interactive line chart that appears inline. They can hover for exact values, toggle series on/off, and even zoom - all without leaving the chat interface.

Our chart schema (`ChartMetadata`) supports everything from simple bar charts to complex 3D visualizations:

```python
from enum import Enum


class ChartType(str, Enum):
    LINE = "line"
    BAR = "bar"
    PIE = "pie"
    SCATTER = "scatter"
    AREA = "area"
    HEATMAP = "heatmap"
    SCATTER_3D = "scatter3d"
    # ... and more
```

The schema is designed to be library-agnostic. Whether you prefer Plotly's powerful 3D capabilities or Chart.js's simplicity, the same chart definition works across libraries.
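To illustrate what library-agnostic means in practice, here is a sketch that maps one schema-style chart dict onto both a Plotly figure (`data` plus `layout`) and a Chart.js v3+ config. The function names and mapping tables are hypothetical, only `line`, `bar`, and `area` are handled, and the Chart.js mapping assumes every series shares the same x labels in the same order.

```python
from typing import Any, Dict, List

# Hypothetical per-library mappings for the chart types this sketch supports
PLOTLY_STYLES = {
    "line": {"type": "scatter", "mode": "lines"},
    "area": {"type": "scatter", "mode": "lines", "fill": "tozeroy"},
    "bar": {"type": "bar"},
}
CHARTJS_TYPES = {"line": "line", "area": "line", "bar": "bar"}


def _plotly_title(value: Any) -> Any:
    """Wrap a bare-string title in Plotly's {"text": ...} form."""
    return {"text": value} if isinstance(value, str) else value


def to_plotly(chart: Dict[str, Any]) -> Dict[str, Any]:
    """Build a Plotly figure dict (data + layout) from a schema-style chart."""
    if chart["type"] not in PLOTLY_STYLES:
        raise ValueError(f"unsupported chart type in this sketch: {chart['type']}")
    traces: List[Dict[str, Any]] = [
        {
            "name": series["name"],
            "x": [point["x"] for point in series["data"]],
            "y": [point["y"] for point in series["data"]],
            **PLOTLY_STYLES[chart["type"]],
        }
        for series in chart["series"]
    ]
    layout = dict(chart.get("layout", {}))  # shallow copy; the input is not mutated
    if "title" in layout:
        layout["title"] = _plotly_title(layout["title"])
    for axis in ("xaxis", "yaxis"):
        if "title" in layout.get(axis, {}):
            layout[axis] = {**layout[axis], "title": _plotly_title(layout[axis]["title"])}
    return {"data": traces, "layout": layout}


def to_chartjs(chart: Dict[str, Any]) -> Dict[str, Any]:
    """Build a Chart.js v3+ config; assumes all series share the same x labels."""
    if chart["type"] not in CHARTJS_TYPES:
        raise ValueError(f"unsupported chart type in this sketch: {chart['type']}")
    datasets = []
    for series in chart["series"]:
        dataset = {"label": series["name"], "data": [point["y"] for point in series["data"]]}
        if chart["type"] == "area":
            dataset["fill"] = "origin"  # shade the area under the line
        datasets.append(dataset)
    labels = [point["x"] for point in chart["series"][0]["data"]] if chart["series"] else []
    config: Dict[str, Any] = {
        "type": CHARTJS_TYPES[chart["type"]],
        "data": {"labels": labels, "datasets": datasets},
        "options": {},
    }
    title = chart.get("layout", {}).get("title")
    if title:
        config["options"]["plugins"] = {"title": {"display": True, "text": title}}
    return config


sales = {
    "type": "bar",
    "series": [{"name": "Actual Sales", "data": [{"x": "Q1", "y": 1200000}, {"x": "Q2", "y": 1800000}]}],
    "layout": {"title": "Quarterly Sales Performance"},
}
print(to_plotly(sales)["data"][0]["x"])       # ['Q1', 'Q2']
print(to_chartjs(sales)["data"]["datasets"])  # [{'label': 'Actual Sales', 'data': [1200000, 1800000]}]
```

Keeping the chart definition neutral means the LLM only ever learns one format, and adding a new rendering library is a matter of writing one more adapter.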

1. **Streaming Buffer Management**: We carefully manage partial delimiter detection to avoid showing incomplete markers to users

2. **Smart Caching**: Chart configurations are cached client-side to avoid re-parsing identical visualizations

3. **Responsive Sizing**: Charts automatically adapt to container dimensions with optimized layouts for both inline and expanded views

4. **Memory Management**: Proper cleanup of chart instances prevents memory leaks in long conversations:

```javascript
// Cleanup when component unmounts
if (window.Plotly && window.Plotly.purge) {
  window.Plotly.purge(portalTargetRef.current);
}
```

Users don't want to wait. By showing text immediately and loading charts asynchronously, we maintain the conversational flow while adding rich visualizations.

Network issues, parsing errors, or unsupported chart types should never break the conversation. Every visualization has a text fallback.
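One way to combine the smart-caching point from earlier with a guaranteed text fallback, sketched under our own assumptions: `parse_chart` memoizes validation per raw payload with `functools.lru_cache`, and `present_chart` degrades to a plain-text summary whenever parsing, validation, or the (hypothetical) `render` callback fails. None of these names come from the SDK.

```python
import json
from functools import lru_cache
from typing import Any, Callable, Dict, Optional

# The ChartType values shown above (the real enum has more)
SUPPORTED_TYPES = {"line", "bar", "pie", "scatter", "area", "heatmap", "scatter3d"}


@lru_cache(maxsize=256)
def parse_chart(raw: str) -> Optional[Dict[str, Any]]:
    """Parse and validate a payload once; repeated identical payloads hit the cache."""
    try:
        spec = json.loads(raw)
    except json.JSONDecodeError:
        return None  # failures are cached too, as None
    if not isinstance(spec, dict) or not isinstance(spec.get("type"), str):
        return None
    if spec["type"] not in SUPPORTED_TYPES:
        return None
    return spec  # shared through the cache, so callers should treat it as read-only


def text_fallback(spec: Optional[Dict[str, Any]]) -> str:
    """Plain-text summary shown whenever a chart cannot be rendered."""
    if spec is None:
        return "Chart could not be displayed."
    lines = [str(spec.get("layout", {}).get("title", "Chart"))]
    for series in spec.get("series", []):
        points = ", ".join(f"{p.get('x')}: {p.get('y')}" for p in series.get("data", []))
        lines.append(f"{series.get('name', 'Series')}: {points}")
    return "\n".join(lines)


def present_chart(raw: str, render: Callable[[Dict[str, Any]], str]) -> str:
    """Render the chart if possible; otherwise return text so the chat continues."""
    spec = parse_chart(raw)
    if spec is not None:
        try:
            return render(spec)
        except Exception:  # renderer or network failure: fall through to text
            pass
    return text_fallback(spec)


def broken_renderer(spec: Dict[str, Any]) -> str:
    raise RuntimeError("charting library failed to load")


raw = ('{"type": "bar", "layout": {"title": "Quarterly Sales Performance"}, '
       '"series": [{"name": "Actual Sales", "data": [{"x": "Q1", "y": 1200000}]}]}')
print(present_chart(raw, broken_renderer))
# Quarterly Sales Performance
# Actual Sales: Q1: 1200000
```

Even when the charting library fails, the user still gets the numbers, and the chat keeps streaming.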

We inject carefully crafted instructions into system prompts to guide LLMs on when and how to generate charts:

```python
def inject_chart_instruction(system_prompt: str) -> str:
    """Add instructions about generating charts."""
    chart_instruction = """
When presenting data that would benefit from visualization:
1. Provide your text response first
2. Then add a chart delimiter: <!--CHART_START-->
3. Include the chart JSON in a code block
4. End with: <!--CHART_END-->
"""
    return system_prompt + chart_instruction
```

Supporting multiple visualization libraries means developers can use their preferred tools without changing their integration.

We're already working on the next generation of conversational visualizations:

Charts that update live as new data arrives, perfect for monitoring dashboards and live analytics.

Click a chart element to ask follow-up questions about that specific data point.

As spatial computing grows, we're exploring 3D visualizations that users can manipulate in space.

Multiple users annotating and discussing the same visualization in real-time.

Ready to add intelligent, visual conversations to your application? Here's how to get started:

1. **Sign up for Coherence** on our app - you'll have charts in your chat in under an hour

2. **Experiment with our Demo** - See chart generation in action with various data types and visualization styles

3. **Check the SDK Docs** - Detailed examples of chart customization and advanced features in our docs

By treating data visualization as a core part of the conversation - not an afterthought - we've unlocked new possibilities for how users interact with AI. Complex data becomes instantly understandable. Trends jump off the screen. Insights emerge naturally.

This is just the beginning. As AI agents become more sophisticated in their analytical capabilities, the ability to communicate visually becomes not just useful, but essential. We're building the infrastructure to make that future accessible to every developer.

---

*Have questions about implementing charts in your conversational AI? Want to share your own approaches to multimodal interfaces? Find me on [Twitter](https://twitter.com/zacharyzaro) or drop us a line at [support@withcoherence.com](mailto:support@withcoherence.com). We'd love to see what you build!*